About the Reading Group

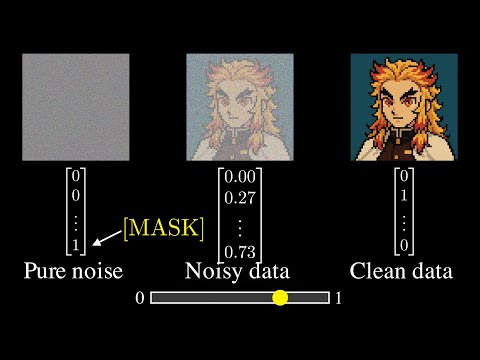

Diffusion LLMs offer a faster, more controllable alternative to traditional autoregressive LLMs and are rapidly gaining adoption. This reading group aims to build a community for exchanging and debating emerging ideas in this space. While our primary focus is discrete diffusion models for language, we also welcome work that extends these methods to other modalities and applications, such as molecular design and drug discovery. Each session features an author-led presentation followed by Q&A, with recordings shared on our YouTube channel.

Paper Discussions

Authors present their work, followed by discussion and Q&A

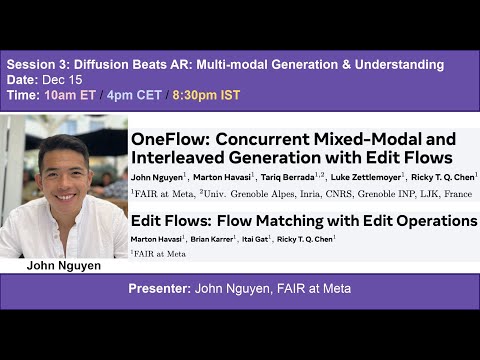

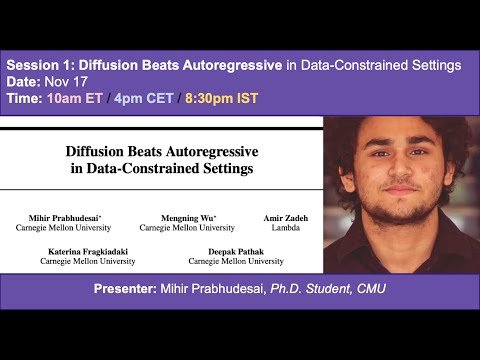

Recorded Sessions

All sessions are recorded and available on YouTube

Community

Stay informed through our email list and Twitter/X

Meet the Organizers

Subham Sahoo

Holds a Ph.D. from Cornell Tech, where he specialized in diffusion language models. He has made foundational contributions to the field, and his work has been deployed at scale by Google, NVIDIA, and ByteDance in language generation and drug discovery.

Justin Deschenaux

PhD student in Machine Learning at EPFL, advised by Prof. Caglar Gulcehre. Previously interned at Apple MLR. His research interests include diffusion language models, fast generative models, and generalization.

Upcoming Session

December 22, 2025

DiffuCoder: Understanding and Improving Masked Diffusion Models for Code Generation

Shansan Gong will present DiffuCoder and discuss how diffusion language models enable global planning and iterative refinement for code generation.

Time: Dec 15 (Monday) · 10 AM ET / 4 PM CET

Meeting link: click here

Papers: DiffuCoder · DiffuLLaMA

Abstract: Diffusion language models (DLMs) generate text by iteratively denoising an entire sequence, enabling global planning and iterative refinement beyond standard autoregressive (AR) decoding. Building on DiffuLLaMA, an approach that adapts pretrained AR models into scalable diffusion models, DiffuCoder brings this paradigm to code. It analyzes decoding trajectories (including flexible causality and temperature-dependent generation order) and introduces coupled-GRPO, a reinforcement learning (RL) method that reduces the variance of the model's likelihood estimates during training. Applied to a 7B model trained on 130B code tokens, coupled-GRPO improves code generation performance (e.g., +4.4% on EvalPlus) and reduces the model's reliance on AR-biased decoding.
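To make "denoising an entire sequence" concrete, here is a toy Python sketch of confidence-based unmasking, one common way masked diffusion LMs decode. It is not DiffuCoder's actual sampler. Generation starts from a fully masked sequence; at each step a predictor proposes a token and a confidence for every masked position, and only the most confident proposals are committed. The predictor `toy_predict`, its fixed confidence scores, and the `tokens_per_step` parameter are hypothetical stand-ins for a trained model and its sampling settings.

```python
from typing import Callable, Dict, List, Tuple

MASK = "_"

# A predictor maps the current (partially masked) sequence to
# {position: (proposed_token, confidence)} for the masked positions.
Predictor = Callable[[List[str]], Dict[int, Tuple[str, float]]]


def masked_diffusion_decode(length: int, predict: Predictor,
                            tokens_per_step: int = 1) -> List[List[str]]:
    """Decode by repeatedly unmasking the most confident positions.

    Returns the full trajectory: the sequence state after every step,
    starting from the all-mask state.
    """
    if tokens_per_step < 1:
        raise ValueError("tokens_per_step must be >= 1")
    seq = [MASK] * length  # start from a fully masked sequence
    trajectory = [list(seq)]
    while MASK in seq:
        # Only consider proposals for positions that are still masked.
        proposals = {i: p for i, p in predict(seq).items() if seq[i] == MASK}
        if not proposals:
            raise ValueError("predictor proposed nothing for masked positions")
        # Rank every masked position by confidence (highest first)...
        ranked = sorted(proposals.items(), key=lambda kv: kv[1][1], reverse=True)
        # ...and commit only the top few; the rest stay masked for later steps.
        for pos, (token, _conf) in ranked[:tokens_per_step]:
            seq[pos] = token
        trajectory.append(list(seq))
    return trajectory


# Hypothetical toy "model": it always proposes the right token, with fixed,
# made-up confidences that favor punctuation and keywords over identifiers.
TARGET = ["def", "add", "(", "a", ",", "b", ")", ":", "return", "a", "+", "b"]
CONF = [0.90, 0.50, 0.95, 0.60, 0.97, 0.60, 0.96, 0.98, 0.92, 0.40, 0.70, 0.40]


def toy_predict(seq: List[str]) -> Dict[int, Tuple[str, float]]:
    return {i: (TARGET[i], CONF[i]) for i, tok in enumerate(seq) if tok == MASK}


if __name__ == "__main__":
    trajectory = masked_diffusion_decode(len(TARGET), toy_predict, tokens_per_step=3)
    for step, state in enumerate(trajectory):
        print(step, " ".join(state))
```

With three tokens per step, the sketch commits `:`, `,` and `)` first and `add` only in the last step, so the order is set by confidence rather than position. This is a small-scale picture of the flexible, non-left-to-right generation order the abstract refers to; a real model would also recompute its confidences after every step.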

Latest Sessions

Latest Relevant Videos

Stay Updated

Join our community and never miss a session