S6 | TiDAR: Think in Diffusion, Talk in Autoregression

Jingyu Liu will discuss TiDAR, a hybrid decoding approach that combines diffusion-style parallel drafting with autoregressive verification to achieve both high output quality and high throughput.

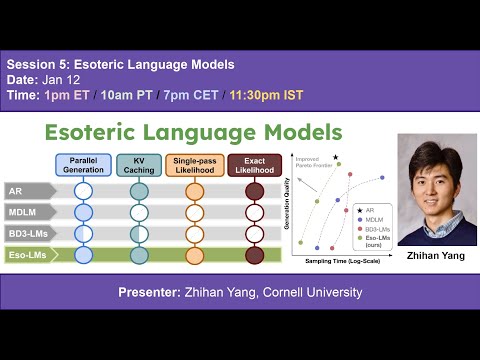

S5 | Esoteric Language Models

In this talk, Zhihan Yang presents Eso-LMs, which unify autoregressive (AR) and diffusion language models. Eso-LMs enable exact likelihoods and KV caching while preserving parallel generation.

S4 | DiffuCoder: Understanding and Improving Masked Diffusion Models for Code Generation

In this talk, Shansan Gong will present DiffuCoder and discuss how diffusion language models enable global planning and iterative refinement for code generation.

S3 | OneFlow: Concurrent Mixed-Modal and Interleaved Generation with Edit Flows

In this talk, John Nguyen presents OneFlow, a non-autoregressive multimodal model for concurrent text and image generation.

S2 | Peptune: De Novo Generation of Therapeutic Peptides with Guided Discrete Diffusion

In this talk, Sophia Tang shows how discrete diffusion enables more controllable and efficient molecule generation.

S1 | Diffusion Language Models Beat AR in the Data-Constrained Regime

In this talk, Mihir Prabhudesai shows that diffusion LLMs outperform AR models in data-constrained settings by extracting more information from limited data.